RE: https://techhub.social/@Techmeme/116181844630974262

jim the office gif

🚀 I've just opened 2 new roles in my department at the Open Home Foundation to work full-time on #HomeAssistant!

🖥️ Frontend Engineer

🔐 Security Engineer

Fully remote. Full-time. #OpenSource every day.

Best job in the world. Working on open source for a non-profit, building the biggest smart home platform on the planet. It changed my life; your chance to change yours.

Boosts appreciated! 🙏

🔗 https://www.openhomefoundation.org/jobs

#SmartHome #Hiring #RemoteWork #FOSS #InfoSec

This is what junior security people think a USB charger at the airport is

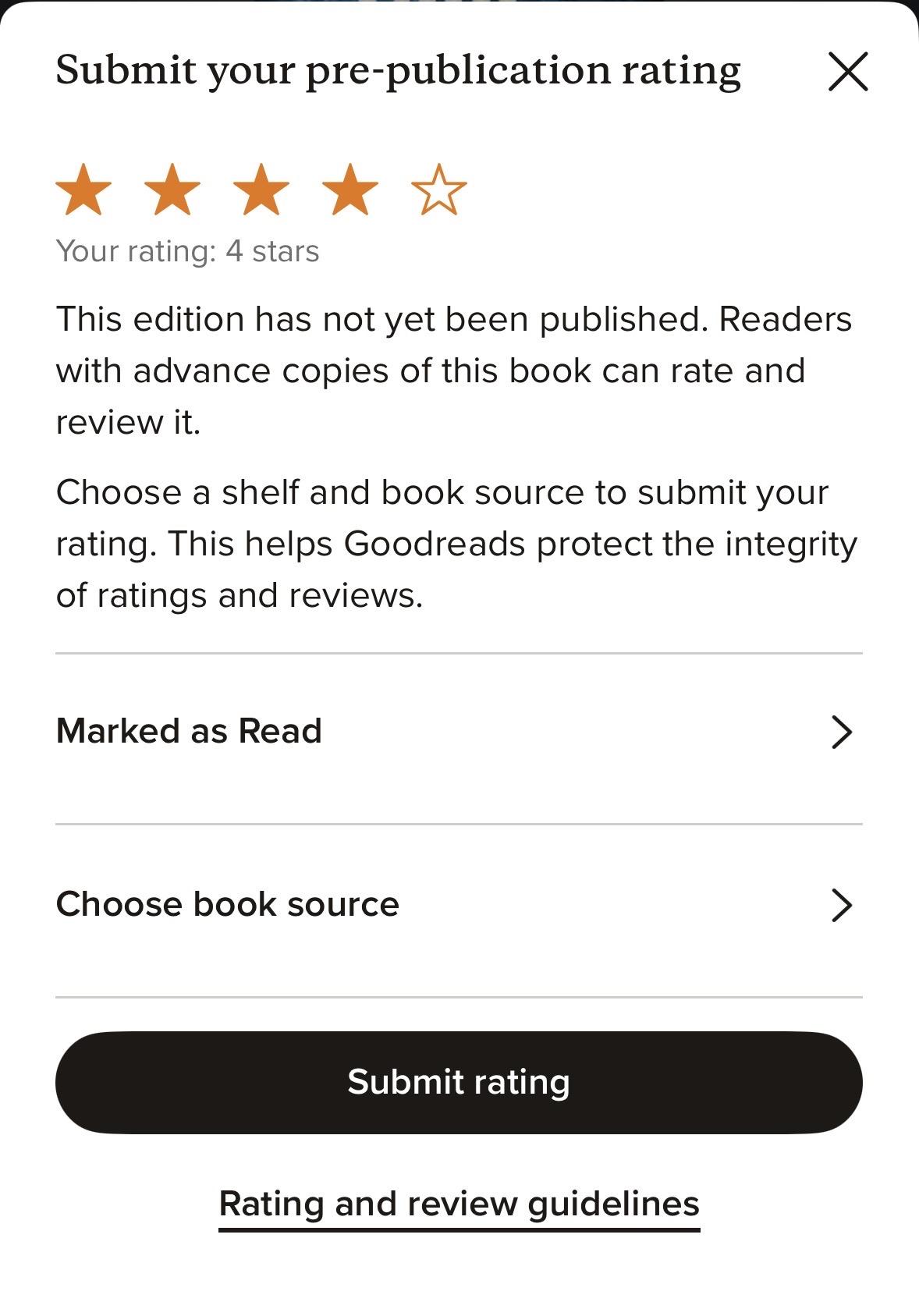

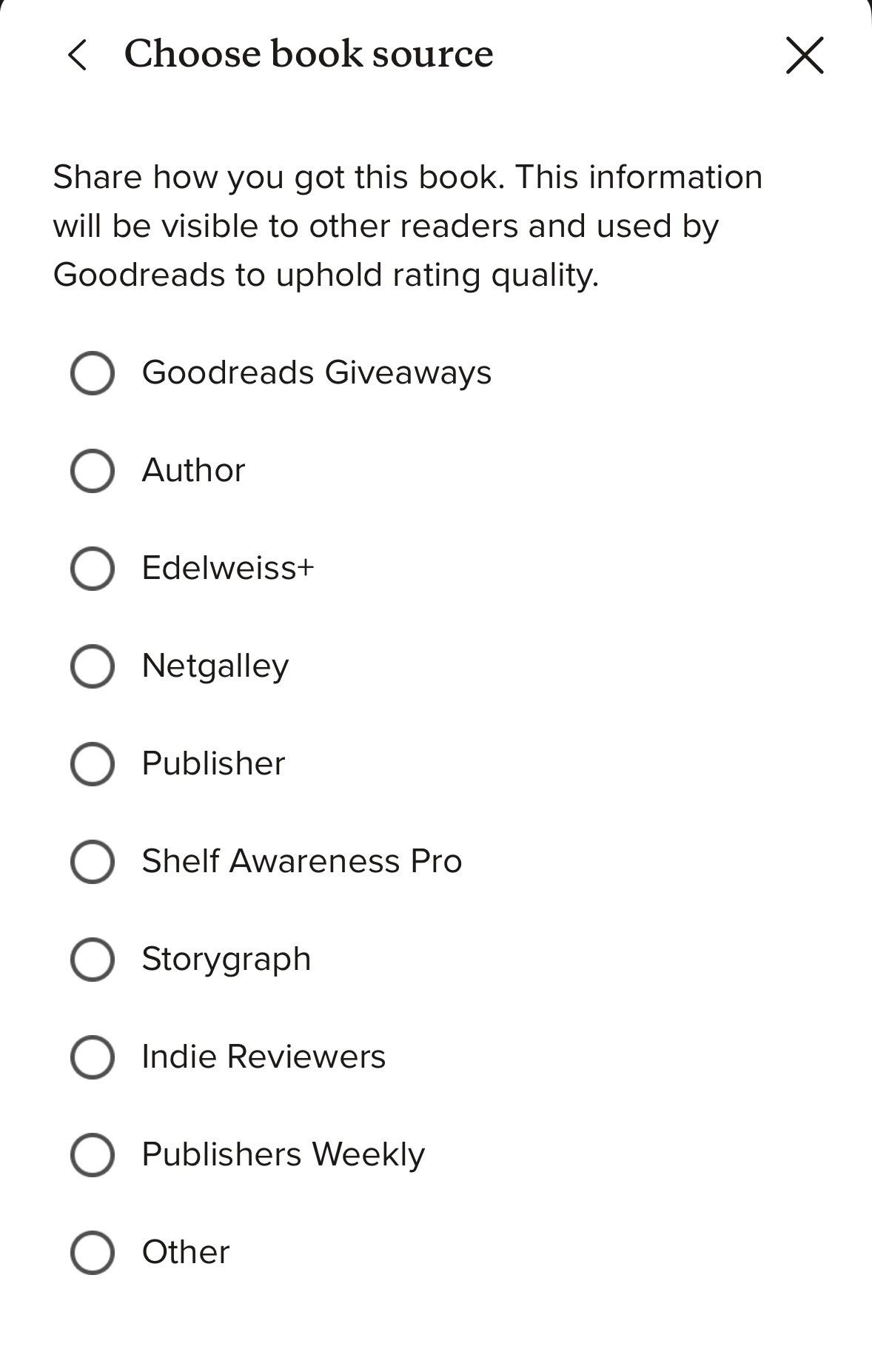

Just learned that when you rate / review a #book before the official publication date, #Goodreads has an extra approval process that asks where you got the book and then manually vets what you say.

I get access to a bunch of Advance Reader Copies (ARCs) through my #publishing job, but this is the first time I've tried to publicly rate one 😅

Fun to see the behind the scenes!

#books

Nobody on LinkedIn has ever had a bad day. Every setback is a "growth opportunity." Every firing is a "new chapter." Every complete professional disaster is framed as "excited to announce." These people would describe the Titanic as "a bold pivot to submarine operations."